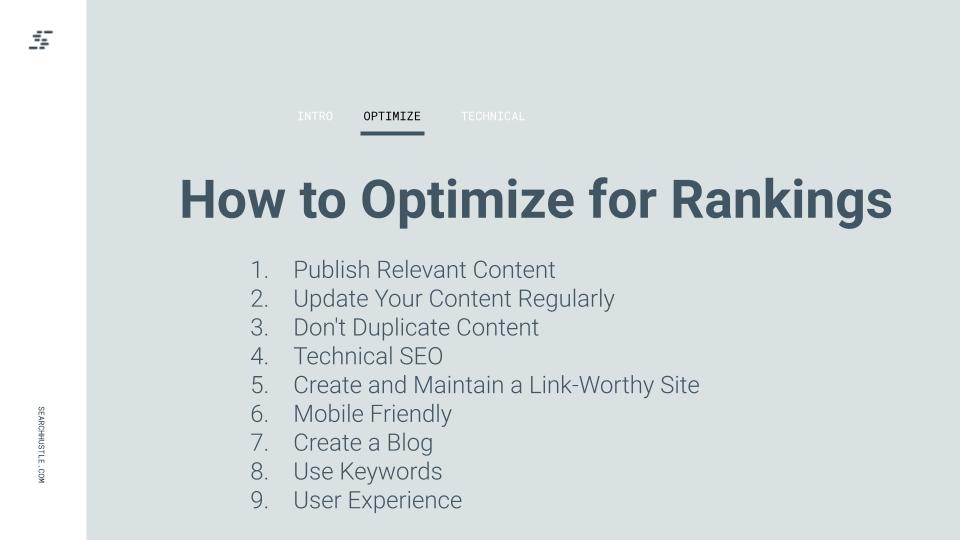

Optimize Rankings

Gone are the days when you could just create a one-page website in Wix with three paragraphs of content and a phone number, and still generate leads. For a website to be productive, valuable, and worth having, optimizing its search engine performance is paramount.

Why is optimizing rankings important?

Optimizing a site for search engines isn’t just a hip thing to do. It’s not another trendy marketing scheme that promises big dividends but ends up wasting your time and money – at least, not if you do a good job at it. Search engine optimization done right can directly push your sales through the roof, open up new markets, and give you a major leg up on the competition.

As we discussed in the previous section, the click-through rate on a search engine result page drops from 28.5% for the first result to 15.7% for the second result. It nose-dives from there to only 2.5% for the tenth result, and hardly anyone goes to page two.

That means that you could nearly double your click-through rate, and in turn hopefully your leads and sales, simply by optimizing a web page and moving it from the second spot to the first. A page that climbs from the bottom of page one stands to gain far more.
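To put rough numbers on that, here is a small sketch that compares the click-through rates quoted above. The function name `ctr_gain` is only an illustration, and the only data it uses are the three percentages mentioned in this section.

```python
# Click-through rates quoted above, keyed by position on the results page.
ctr_by_position = {1: 28.5, 2: 15.7, 10: 2.5}


def ctr_gain(from_pos: int, to_pos: int) -> float:
    """Return how many times larger the CTR is at to_pos than at from_pos."""
    return ctr_by_position[to_pos] / ctr_by_position[from_pos]


print(round(ctr_gain(2, 1), 2))   # 1.82
print(round(ctr_gain(10, 1), 1))  # 11.4
```

Moving from second to first is worth roughly 1.8 times the clicks, while moving from tenth to first is worth more than eleven times the clicks.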

Publish Relevant Content

The first and primary step to optimizing a site is publishing quality content. What does that mean? Relevance and readability, first and foremost. A site needs to stay on topic and get to the point of what it is that searchers are looking for. It also needs to be written in the language of the target audience. Don’t write about what you don’t know about. Before you ever write a blog or service page for your website, make sure you’re an expert in that field. Conduct ample research into keywords and the lingo in that space.

Each piece of content on a website should be written with a specific purpose in mind, to achieve a specific goal, and it should be strategically written to attract your target audience, to elicit shares and backlinks, and to generate leads.

Update Your Content Regularly

It’s not enough to simply write new content and never touch the existing pages. Content should be frequently revisited to keep it relevant. In SEO, for example, things are constantly changing based on new technology, algorithm updates, and new insights into best practices. An SEO company should revisit their blogs every so often to make sure that the info is still accurate.

Why? For one, authority. If searchers land on a site and find only outdated info, they will doubt its authority and be less likely to use its services. Secondly, it directly impacts bounce rates and dwell time. As soon as a searcher realizes that an article has not been updated in months or years, they’re going to go back to Google and find a more relevant post on that subject. Google’s algorithm is widely believed to weigh engagement signals like bounce rate and dwell time so that it only ranks helpful pages that provide valuable information. As your competitors publish newer posts on the same subjects, Google will move them up in the rankings as your data ages.

It’s not just SEO companies that have to worry about this. It’s every industry. Airlines fly new planes, exterminators use new products, wiki sites have new information, and sports teams are constantly playing games. Keep the info as up to date as possible to make sure that it’s continually offering value to the readers, so they dwell longer, come back more often, and your ranking improves, all in hopes of converting traffic into leads.

Don’t Duplicate Content

Content is king, but it has to be good content. Duplicate content can effectively get a site “penalized.”

This is common with franchise sites. A company with numerous locations could be tempted to geomodify and reuse the same content. However, when Google sees that all of the location pages have nearly identical content, it could be confused as to which pages to index and which to ignore.

It’s not technically a penalty, but it can hurt your organic ranking. Adding a /locations/ tag to the URL can help with this, and geomodifying each page with the city names can also help, but ultimately it’s best to have unique content on each page (as much as possible – or at least written by a human).

Oxi Fresh Carpet Cleaning is a great example of a well-built site. Nozak Consulting built unique service pages for over 700 locations, radically improving their organic traffic and sales in just a few months.

Technical SEO

While content is written primarily for humans, with a little bit of bot optimization, technical SEO is all about the bots. Technical SEO improvements can help a website rise quite quickly in the SERPs. It’s the process of optimizing a site to make the crawling and indexing processes easier, and to create a web structure that is friendly to search engines.

Why is technical SEO so important? No matter how good your content is, if the architecture of your site is garbage, you’re making ranking harder than it should be. If Google can’t find your page, crawl it, and index it, then you will not see the organic traffic gains that are possible.

Technical SEO is called “technical” because it’s…well…technical. If anybody could do it, it would be called beginner’s SEO. If you’re naturally gifted with computers and have experience in coding – great! If not, odds are you’re going to need a front-end developer to handle these aspects of your site, or at least someone comfortable with HTML/CSS, or minimally someone confident in learning how to make fixes to the website.

Let’s start with the basics

An organized site structure is paramount. Every piece of content should fit somewhere within a predetermined hierarchy (content research). Visual Site Mapper is a great tool for looking at the structure of your site and comparing it to competitors.

URL structure is also a fundamental aspect of site building that almost anyone can do (researching skills required). For local companies, URLs should utilize local structure (geo modifications) that is consistent across the site. Creating consistently named URLs helps bots to understand the layout, which in turn can reward you on the SERPs.

Consider a blog site about home improvement. If half of the blogs are about DIY projects and half are product reviews, putting those categories/tags in the site structure can pay dividends. www.homeimprovement.com/guides/chain-link-fencing and www.homeimprovement.com/products/chain-link-fencing are going to be more valuable than www.homeimprovement.com/blog/guide-to-chain-link-fencing. This structure will give Google an understanding of how each individual page contributes to the overall site hierarchy. A quality site structure could even lead to Google rewarding you with sitelinks.
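As a rough illustration of consistent URL naming, the sketch below builds category-based URLs like the ones in the home improvement example. The helper names `slugify` and `build_url` and the `https://` scheme are assumptions made for the example, not part of any particular CMS.

```python
import re


def slugify(text: str) -> str:
    """Lowercase the text and join its alphanumeric words with hyphens."""
    words = re.findall(r"[a-z0-9]+", text.lower())
    return "-".join(words)


def build_url(domain: str, category: str, title: str) -> str:
    """Place every page under its category so the hierarchy shows in the URL."""
    return f"https://{domain}/{slugify(category)}/{slugify(title)}"


print(build_url("www.homeimprovement.com", "Guides", "Chain Link Fencing"))
# https://www.homeimprovement.com/guides/chain-link-fencing
print(build_url("www.homeimprovement.com", "Products", "Chain Link Fencing"))
# https://www.homeimprovement.com/products/chain-link-fencing
```

Generating every URL through one function like this is one way to keep naming consistent as a site grows.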

We would not want a service page, a guide, and a product page all competing with each other. When we see this happening, we use Google Search Console (GSC) as a litmus test and improve all three resources so that each stands on its own keyword cloud and search intent. Be mindful of how the service pages are built, how the product pages present their content, and how any complete guides compete for the same keywords, and monitor GSC for feedback on each. That way we aren’t confusing the bots about when and where each resource should appear in the SERPs. There are writing techniques that help these three types of content assets work together, not against each other.

Next comes indexing

If Google can’t index your site, it won’t show searchers your site. Use Google Search Console to generate a coverage report and look for pages with errors or warnings. After fixing all of the errors, it’s time to break out Screaming Frog.

Screaming Frog can find broken links, analyze page titles and meta data, generate XML sitemaps, discover duplicate content, audit redirects, visualize site architecture, review meta robots and directives, audit hreflang attributes, perform spelling and grammar checks, and more. It’s the ace up a developer’s sleeve.

Ahrefs also offers SEO site audits and assigns you a technical SEO health score. It can identify page-by-page loading speeds, issues with HTML tags, and more.

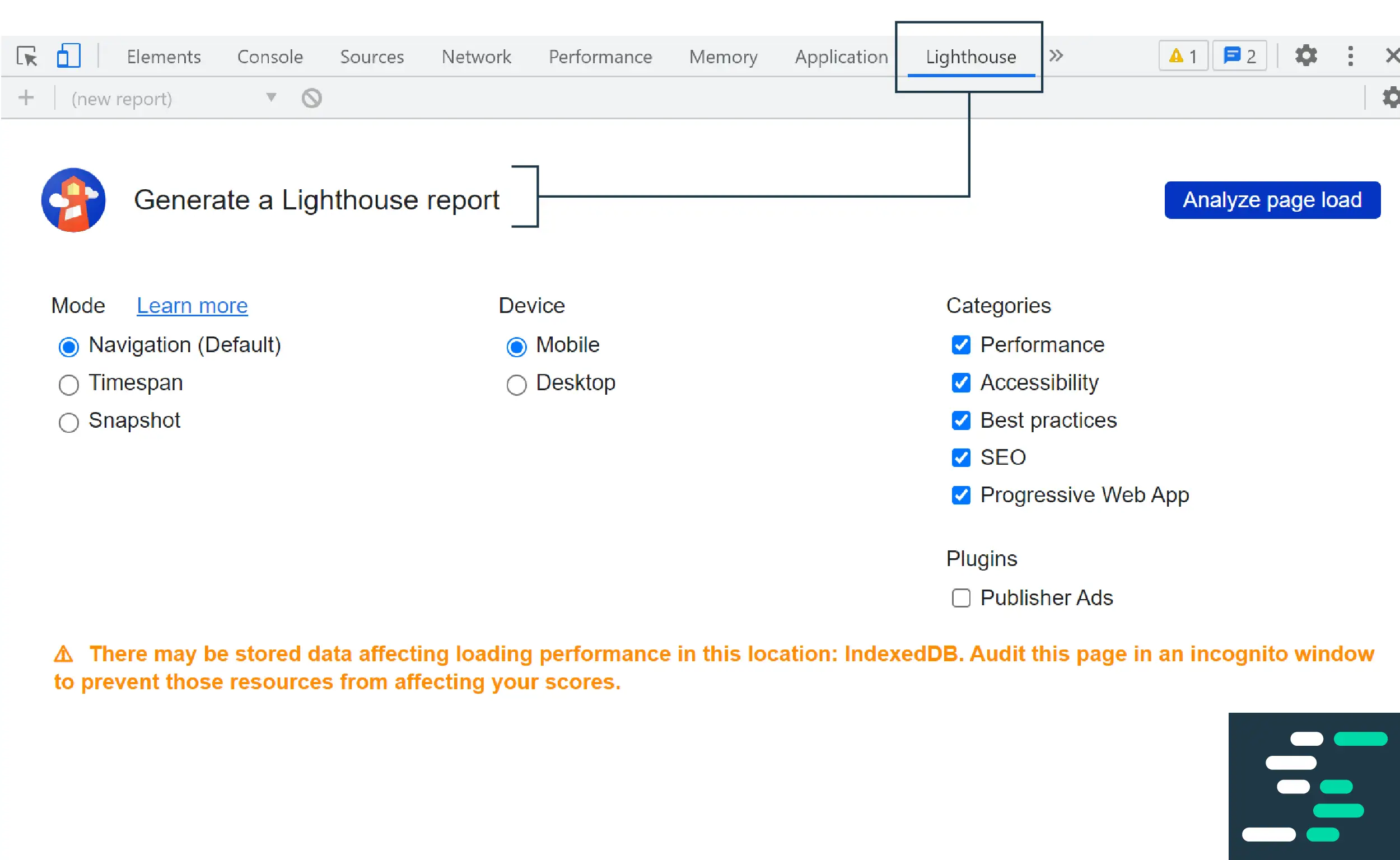

And don’t forget Google Lighthouse, which gives you another set of SEO barometers to make sure your site is built in a way that search engines reward rather than penalize.

The bigger a site is, the more redundancy audits it needs, so all of these tools should be utilized to make sure nothing slips through the cracks.

Let’s talk about the three-click rule. It was once a predominant school of thought that a person should only need three mouse clicks to get to the information they’re looking for. Any more than that, and they will become frustrated and leave the site.

The three-click rule has been widely disproven, and developers now treat it more as a reminder to keep their sites as easy to use as possible. The focus should be on making the site user friendly, not on hitting some click number. For instance, sites that have thousands of products to browse through should offer filters that narrow the field down and allow the user to locate exactly what they need.

Don’t build a site that’s confusing to navigate and leaves readers unable to locate valuable information. The number of clicks is irrelevant, so long as the searcher is actively narrowing the field and getting closer to what they need.

Internal linking is a crucial form of technical SEO that allows you to lend authority from your more authoritative pages to the pages that are less authoritative.

Don’t accumulate dead links!

Internal linking is important and it needs to be maintained. Broken internal links can ding an SEO score and hurt usability and crawlability, so when your team deletes pages or restructures URLs, make sure redirects are put in place and documented so that no dead links are left behind. Screaming Frog, Ahrefs, SEMrush, and GSC can all identify broken links in their crawls.
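The idea behind a broken-link check can be sketched in a few lines. This is not how any of the tools above work internally. It is a simplified illustration that assumes you already have a map of which pages link where, a set of live paths, and a record of your redirects (all of the sample paths are hypothetical).

```python
def find_dead_links(links_by_page, live_paths, redirects=None):
    """Return (source page, link target) pairs that lead nowhere.

    A link counts as alive if its target exists, or if a documented
    redirect sends it to a page that exists.
    """
    redirects = redirects or {}
    dead = []
    for page, targets in links_by_page.items():
        for target in targets:
            resolved = redirects.get(target, target)
            if resolved not in live_paths:
                dead.append((page, target))
    return dead


# Hypothetical site data for illustration only.
links_by_page = {
    "/": ["/guides/chain-link-fencing", "/blog/guide-to-chain-link-fencing"],
    "/guides/chain-link-fencing": ["/products/chain-link-fencing", "/products/old-fence"],
}
live_paths = {"/", "/guides/chain-link-fencing", "/products/chain-link-fencing"}
redirects = {"/blog/guide-to-chain-link-fencing": "/guides/chain-link-fencing"}

print(find_dead_links(links_by_page, live_paths, redirects))
# [('/guides/chain-link-fencing', '/products/old-fence')]
```

The old blog URL survives because its redirect was documented, while the deleted product page shows up as a dead link that needs a fix.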

How do you create an XML sitemap?

XML sitemaps remain the second-most important source for finding URLs, according to Google rep Gary Illyes.

Fortunately, it doesn’t necessarily take expertise in coding. www.XML-sitemaps.com will crawl your site and create a sitemap file that you can then upload to the root folder of your domain. Then open Search Console and submit the sitemap URL.

Or, if a site is built in WordPress, the Yoast SEO Plugin can create a sitemap file automatically.

Once a sitemap is live, check the Sitemaps index page in Search Console to make sure it was successful. Individual URLs can also be inspected in the Search Console to ensure that the page is indexing correctly.
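For anyone curious what a sitemap file actually contains, here is a minimal sketch that writes one with Python’s standard library. The page URLs and dates are made up for illustration, and a generator tool or plugin will usually produce a more complete file.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(pages):
    """Build sitemap XML from (url, last modified date) pairs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in pages:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


# Hypothetical pages for illustration only.
pages = [
    ("https://www.homeimprovement.com/guides/chain-link-fencing", "2021-06-01"),
    ("https://www.homeimprovement.com/products/chain-link-fencing", "2021-06-15"),
]
sitemap_xml = build_sitemap(pages)
print(sitemap_xml)
```

The resulting text would be saved as something like `sitemap.xml` in the root folder of the domain and then submitted in Search Console.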

Use Schema to create Rich Snippets

Take a look at these two snippets in Google. Which is more enticing?

Rich snippets show extra content in the SERP such as reviews, prices, publication dates, etc. Structured data can create a more enticing SERP result that can increase organic CTR. Try Schema.org to browse relevant Schema templates.
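As an example of what structured data looks like, the sketch below generates a JSON-LD snippet for a product with a price and a review rating. It uses the `Product`, `Offer`, and `AggregateRating` types from Schema.org. The product name and the numbers are hypothetical, and the function name `product_jsonld` is just for illustration.

```python
import json


def product_jsonld(name, price, currency, rating, review_count):
    """Return a script tag holding Schema.org Product markup as JSON-LD."""
    data = {
        "@context": "https://schema.org",
        "@type": "Product",
        "name": name,
        "aggregateRating": {
            "@type": "AggregateRating",
            "ratingValue": rating,
            "reviewCount": review_count,
        },
        "offers": {
            "@type": "Offer",
            "price": price,
            "priceCurrency": currency,
        },
    }
    body = json.dumps(data, indent=2)
    return '<script type="application/ld+json">\n' + body + "\n</script>"


# Hypothetical product values for illustration only.
snippet = product_jsonld("Chain Link Fence Kit", "149.99", "USD", 4.6, 87)
print(snippet)
```

The printed block would go in the HTML of the product page. Whether Google chooses to show a rich snippet for it is up to Google, so treat the markup as an invitation rather than a guarantee.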

Improve Thin and Duplicate Content

A couple of short, uninformative sentences are not enough to compete for tough keywords. Pages need to be robust and valuable. The Raven Tools site auditor or Siteliner can identify pages with a low word count so you can beef them up.

These tools can also identify pages with duplicate content, both internal and external. External means that the page copy came from elsewhere on the internet. This is a no-no because Google has most likely already indexed the original source and knows which page came first, so duplicates are far less valuable (there are some instances where a duplicate can provide value, but it will always be secondary to the original piece).

This can commonly affect ecommerce sites that sell products with product descriptions that are available elsewhere on the net. If Walmart, Target, K-Mart, and other sites are all using the original manufacturer’s description of a product, they’ll have a tough time competing for that item. Rewriting product descriptions can give you a leg up.

Internal duplicate content is also common, where the same copy appears on several pages of one site. You generally don’t want two pages competing for the same SERPs. For multi-location (franchise) websites that want to describe a service or product in a consistent voice, geomodifying each page and writing original copy for it can keep the voice while still producing original content.
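The two checks described in this section, low word count and near-identical copy, can be sketched roughly as follows. The thresholds (300 words and 80% similarity) are assumptions chosen for the example, not numbers published by Google or by the audit tools, and the sample pages are hypothetical.

```python
from difflib import SequenceMatcher
from itertools import combinations

MIN_WORDS = 300       # assumed cutoff for "thin" content
MAX_SIMILARITY = 0.8  # assumed cutoff for "near-duplicate" copy


def word_count(text):
    return len(text.split())


def similarity(text_a, text_b):
    """Return a 0 to 1 ratio of shared wording, compared word by word."""
    words_a = text_a.lower().split()
    words_b = text_b.lower().split()
    return SequenceMatcher(None, words_a, words_b, autojunk=False).ratio()


def audit(pages, min_words=MIN_WORDS, max_similarity=MAX_SIMILARITY):
    """Return (thin page URLs, pairs of near-duplicate page URLs)."""
    thin = [url for url, text in pages.items() if word_count(text) < min_words]
    duplicates = [
        (url_a, url_b)
        for (url_a, text_a), (url_b, text_b) in combinations(pages.items(), 2)
        if similarity(text_a, text_b) > max_similarity
    ]
    return thin, duplicates


# Hypothetical page copy; min_words is lowered so the short samples pass.
pages = {
    "/locations/tulsa": "We clean carpets in Tulsa with eco friendly products",
    "/locations/denver": "We clean carpets in Denver with eco friendly products",
    "/about": "Our family has run this business for three generations",
}
thin, duplicates = audit(pages, min_words=5)
print(thin)        # []
print(duplicates)  # [('/locations/tulsa', '/locations/denver')]
```

The two location pages differ only by the city name, which is exactly the geomodified-but-otherwise-identical pattern that deserves a rewrite.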

Some pages just aren’t SEO hungry, or they have a purpose other than ranking in search engines. These pages don’t need to be found in the SERPs, so optimizing them for search isn’t important. We would still optimize them to fulfill their actual purpose.

Now let’s talk Page Speed

Improving the load times of pages and images will affect a site’s ranking in the SERPs. A terrible page that loads quickly won’t necessarily rank, but a page with lots of quality content that won’t load will absolutely struggle in the SERPs.

Great writing and great photography will not help you if it doesn’t load in a reasonable amount of time.

Compressing images and videos to a reasonable quality that doesn’t take too many seconds to load can help, because the size of the web page itself is highly important.

Google PageSpeed Insights is a splendid tool for diagnosing load issues. It will tell you exactly which issues are slowing your site down so you can fix them.

International Websites

Multinational companies often create duplicate pages that have been translated based on the region. This is duplicate content that is okay, but it needs to be identified to the search engines.

These McDonald’s URLs are an example. They have identical content, but each page has been translated for English- or Spanish-speaking Americans.

- https://www.mcdonalds.com/us/en-us.html

- https://www.mcdonalds.com/us/es-us.html

These pages are fine to have, and Google won’t penalize the repeat content because it has been translated. However, the language differences need to be identified through hreflang tags. Google Search Central can walk you through this process.
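To give a sense of what those tags look like, here is a small sketch that prints hreflang link tags for the two McDonald’s URLs above. The language codes `en-us` and `es-us` are taken from the URLs themselves, the helper name `hreflang_tags` is made up, and this is not a claim about the markup McDonald’s actually uses.

```python
from html import escape


def hreflang_tags(alternates):
    """Return one <link> tag per language version of a page.

    Every language version of the page should carry the full set of
    tags, including the one that points back to itself.
    """
    return "\n".join(
        f'<link rel="alternate" hreflang="{escape(lang)}" href="{escape(url)}" />'
        for lang, url in alternates.items()
    )


alternates = {
    "en-us": "https://www.mcdonalds.com/us/en-us.html",
    "es-us": "https://www.mcdonalds.com/us/es-us.html",
}
tags = hreflang_tags(alternates)
print(tags)
```

The output goes in the `<head>` of each translated page so that search engines can match every visitor with the right language version.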

Create and Maintain a Link-Worthy Site

Inbound links are huge, both for the authority they lend a site in the eyes of search engines, and for the organic traffic they can generate when people click on them.

If a site is worth linking to, then people will link to it. And if it’s not, they usually won’t.

No matter what industry a site is centered around, it’s capable of providing value. Outside of product reviews, an ecommerce site isn’t likely to generate many inbound links if the only pages on the site are the products themselves. But if an ecommerce site writes its own product reviews, or offers valuable insights into new and exciting products, or provides “top-ten for value” lists and things of that nature – boom, that’s link-worthy.

People want insightful, exciting, authoritative content. Even if you’re trying to sell a service, rather than a product, you can still give away some of your service for free. Take this blog for instance. There is lots of valuable insight here. That’s why you’re here, right?

But while this information is valuable, it’s nothing compared to our paid content. This free blog is generating inbound traffic for us, and our sales are increasing as a result.

It’s 2021, and the majority of web traffic now comes from mobile phones. In the first quarter of 2021, that share was 54.8%, and that doesn’t even include tablets, according to Statista.

Since 2019, Google has been using mobile-first indexing to ensure that pages are fully optimized for the new world. However, that still leaves a huge volume of web searches happening via desktop. When designing a site, consistently look at it through both the mobile and desktop previews so that it provides a quality user experience through both lenses.

Create a Blog

Blogs are a great way to generate recurring traffic, create site hierarchy, and add authority to existing pages. Blogs can add context to existing content and use internal linking to drive traffic to your most important pages – the pages that sell products or services.

Most of a website should be somewhat evergreen. Although content should be updated from time to time, because information is always changing, the primary pages of a site should be relevant for months at a time (the slugs should be relevant much longer). Blogs are an additional resource to capitalize on additional content topics, long-tail topics, breaking news, and seasonal topics in order to gain more digital footprint in the SERPs.

The chamber of commerce holds a ribbon cutting at your newest franchise? Blog it and point it back to the primary city page. An influencer endorses a product that you sell? Blog it and point it back to the product page.

Use Keywords

Every single piece of content on your site, from the blogs to the primary pages, to the meta titles, to the image names and descriptions should be based on well-researched keywords that potential clients and customers are searching for.

Check Online Reviews

User Experience

Remember, the user experience is what SEO is all about. It’s not about impressing Google, it’s about impressing the real humans who find a site.

Fast load speeds aren’t about SERPs, they’re about making sure your customers aren’t getting frustrated and leaving before accomplishing their goal for the site.

Quality content isn’t about earning inbound links, it’s about giving your customers value and a great experience, so that they’ll keep coming back.

Even ranking in the SERPs is a part of user experience. You have a great product, and you want to get it in front of the people who need it. Every piece of SEO is all about the user experience.
